Tencent Debuts a More Cost-Effective LLM Than DeepSeek-R1

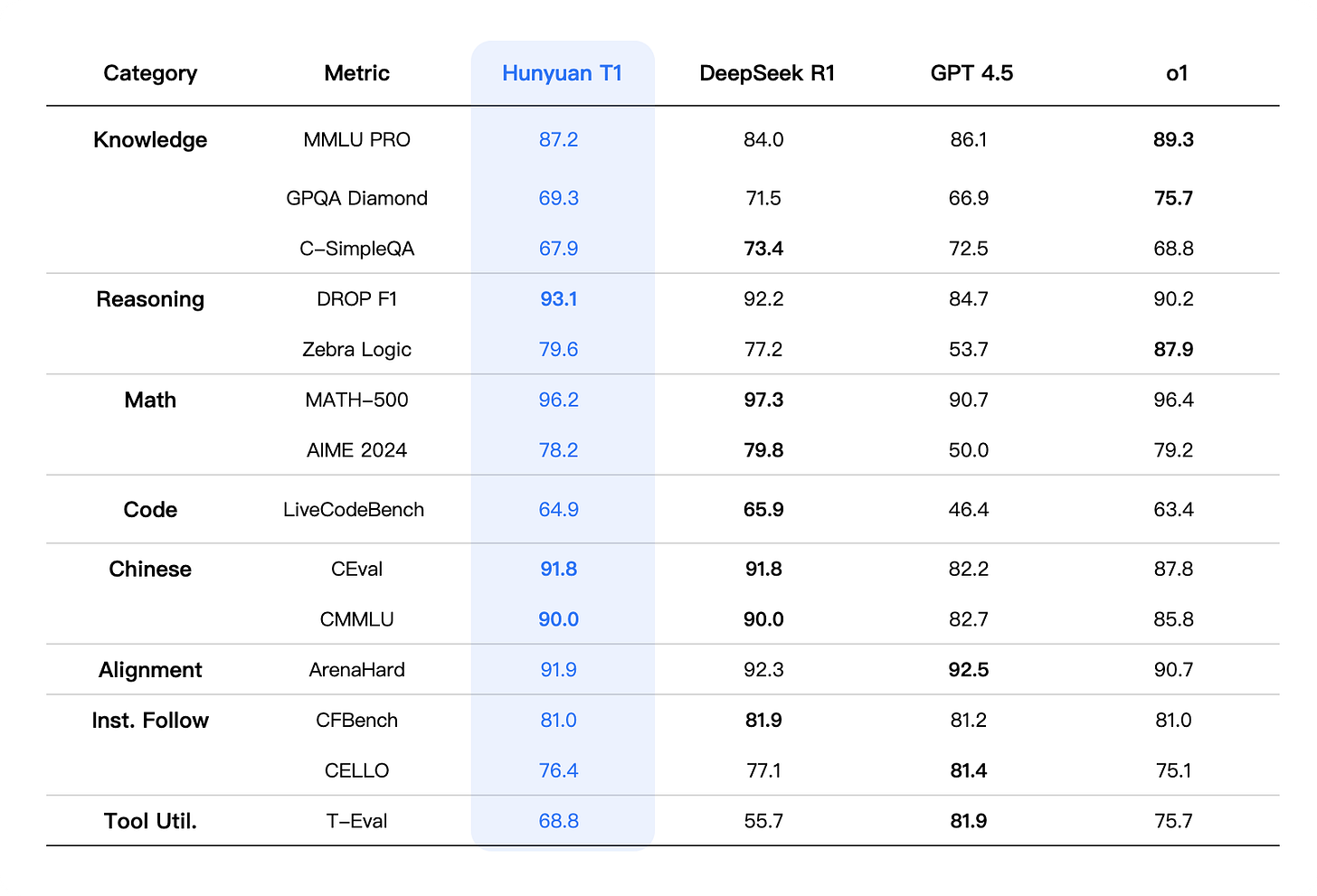

Benchmark results place Hunyuan-T1 ahead in key AI performance areas, at one-quarter of DeepSeek-R1's standard-hours price

Reinforcement learning (RL) continues to revolutionize the AI landscape, particularly in refining large language models (LLMs) post-training.

Following other significant recent launches such as Baidu's ERNIE-X1, Tencent has officially unveiled Hunyuan-T1, positioning it as a superior, more affordable alternative to DeepSeek-R1.

Hunyuan-T1 is the industry’s first ultra-large-scale Hybrid-Transformer-Mamba mixture-of-experts (MoE) model specifically optimized for advanced reasoning tasks.

Hunyuan-T1 builds on the earlier success of Hunyuan-T1-Preview, with significantly enhanced reasoning capabilities that align more closely with human thought processes and user preferences.

The core innovation behind Hunyuan-T1 is Tencent’s proprietary TurboS base model, a large-scale Hybrid-Transformer-Mamba MoE architecture first introduced in March.

TurboS addresses critical challenges such as context loss and long-distance information dependency, enabling more efficient long-text context processing. The optimized architecture achieves up to twice the decoding speed of comparable models under similar deployment conditions, resulting in lower operational costs.

Employing advanced training techniques, including curriculum learning strategies and phased policy resets, Tencent improved token efficiency and training stability by over 50%.
