Tencent has unveiled its next-generation fast-reasoning AI model, Hunyuan Turbo S, setting a new benchmark for real-time responsiveness in large language models (LLMs).

Officially launched on February 27, 2025, Turbo S is now available on Tencent Cloud via API calls and is rolling out for public testing on Tencent Yuanbao, the company's AI-powered chatbot platform.

Turbo S: Speed and Intelligence Redefined

Slow-thinking models such as DeepSeek R1 and Hunyuan T1 reason through a problem before generating a response, which helps ensure logical coherence but delays the answer.

In contrast, Turbo S relies on fast-thinking mechanisms that significantly improve response speed. The model doubles word generation speed and cuts first-word latency by 44%, a major gain for real-time AI interactions.
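To see what those two figures mean together, total response time can be modeled as first-word latency plus word count divided by generation speed. The sketch below applies the 2x speed and 44% latency figures to a hypothetical baseline; the baseline values and the function name are assumptions chosen for illustration, not measurements published by Tencent.

```python
def response_time_s(first_word_latency_s: float, words_per_second: float, word_count: int) -> float:
    """Simple model: wait for the first word, then stream the rest at a steady rate."""
    return first_word_latency_s + word_count / words_per_second


# Hypothetical baseline model (assumed values, not from the article).
BASELINE_LATENCY_S = 1.0
BASELINE_WORDS_PER_S = 50.0

# Improvements quoted in the article.
SPEEDUP = 2.0             # 2x word generation speed
LATENCY_REDUCTION = 0.44  # 44% lower first-word latency

turbo_latency_s = BASELINE_LATENCY_S * (1 - LATENCY_REDUCTION)
turbo_words_per_s = BASELINE_WORDS_PER_S * SPEEDUP

for word_count in (50, 500):
    baseline = response_time_s(BASELINE_LATENCY_S, BASELINE_WORDS_PER_S, word_count)
    turbo = response_time_s(turbo_latency_s, turbo_words_per_s, word_count)
    print(f"{word_count} words: baseline {baseline:.2f} s, faster model {turbo:.2f} s")
```

Under this model the latency reduction dominates for short replies, while the doubled generation speed dominates for long ones.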

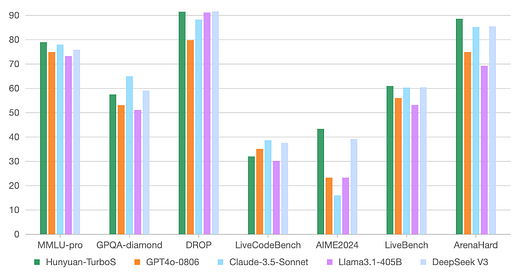

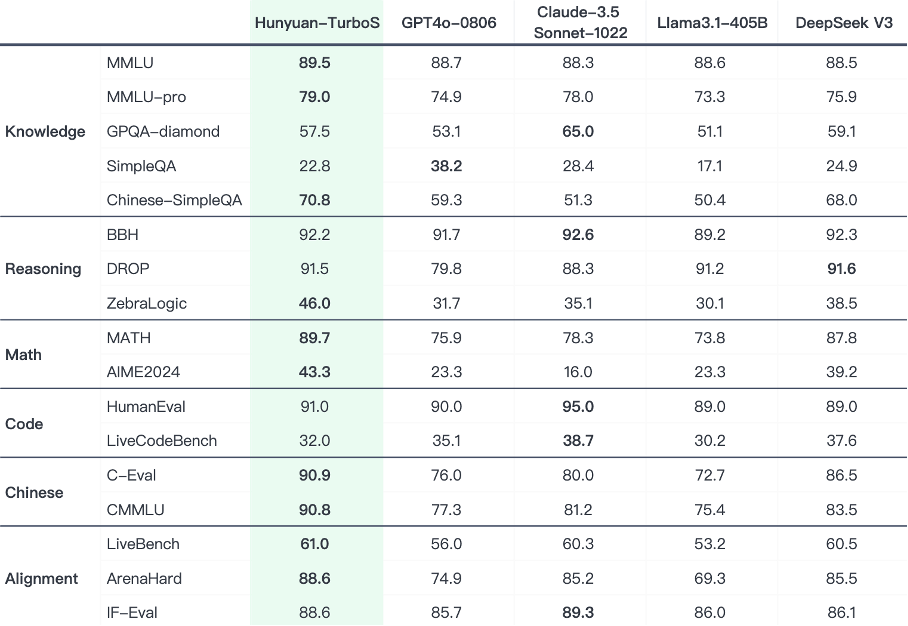

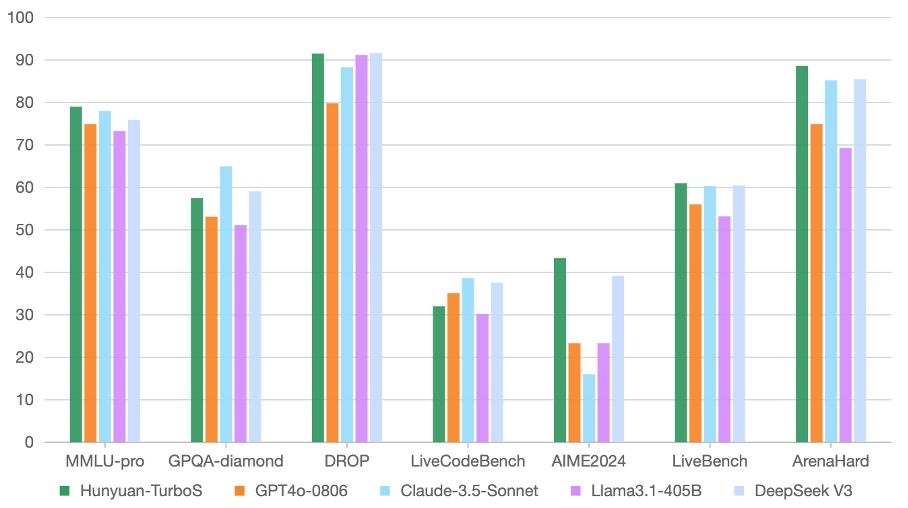

Beyond speed, Turbo S excels in knowledge retention, mathematical reasoning, and creative generation, competing with industry leaders like DeepSeek V3, GPT-4o, and Claude 3.5 in multiple benchmark tests. This evolution in AI design marks Tencent’s ambition to bridge the gap between human-like intuition and analytical reasoning.

The Science Behind Fast Thinking AI

Cognitive science suggests that 90% to 95% of human decision-making is driven by intuition, a pattern that Turbo S's fast-thinking design is meant to mirror.

This approach enables AI to provide immediate, intuitive responses while still integrating slow-thinking capabilities for more complex reasoning tasks. By combining these two cognitive strategies, Tencent is shaping AI to become more intelligent and efficient in problem-solving.

Hybrid-Mamba-Transformer: The Future of AI Model Architecture

One of the most striking innovations in Turbo S is its Hybrid-Mamba-Transformer fusion architecture. Unlike conventional Transformer-based models, which suffer from high computational costs in long-text processing, this hybrid approach optimizes memory and computing efficiency. Key advantages include:

Lower computational complexity compared to standard Transformer models

Reduced KV-Cache memory footprint, enabling cost-effective training and inference

Integration of Mamba's long-sequence processing capabilities with the Transformer's deep-context comprehension

Tencent describes this as the industry's first application of the Mamba architecture in a very large Mixture of Experts (MoE) model, a claim that positions the company as a pioneer in scalable, efficient AI.
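The KV-Cache saving can be illustrated with a back-of-the-envelope calculation. In a Transformer, every attention layer stores one key and one value tensor per token, so the cache grows with both sequence length and the number of attention layers. Mamba layers instead keep a fixed-size state that does not grow with sequence length, so replacing most attention layers with Mamba layers shrinks the cache. The layer counts, head sizes, and function name below are hypothetical and are not Turbo S's actual configuration.

```python
def kv_cache_bytes(
    attention_layers: int,
    kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch_size: int = 1,
    bytes_per_value: int = 2,  # 16-bit values
) -> int:
    """Size of the key/value cache for the attention layers only."""
    # Factor of 2: one key tensor and one value tensor per attention layer.
    return 2 * attention_layers * kv_heads * head_dim * seq_len * batch_size * bytes_per_value


# Hypothetical model shape (assumed for illustration).
TOTAL_LAYERS = 64
KV_HEADS = 8
HEAD_DIM = 128
SEQ_LEN = 32_768

# Pure Transformer: every layer is an attention layer.
full = kv_cache_bytes(TOTAL_LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)

# Hypothetical hybrid: only 1 in 8 layers uses attention; the rest are Mamba
# layers whose fixed-size state is not counted here.
hybrid = kv_cache_bytes(TOTAL_LAYERS // 8, KV_HEADS, HEAD_DIM, SEQ_LEN)

GIB = 2**30
print(f"Pure Transformer KV cache: {full / GIB:.1f} GiB")
print(f"Hybrid KV cache:           {hybrid / GIB:.1f} GiB")
```

With these assumed numbers the cache shrinks in direct proportion to the number of attention layers removed, which is where the memory and cost savings at long context lengths would come from.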

Practical Applications and Cost Efficiency

As a flagship model, Turbo S will serve as the foundation for Tencent's future AI developments, supporting specialized models for reasoning, long-form content generation, and code completion.

Additionally, Tencent is enhancing its reasoning capabilities with Hunyuan T1, a model designed for complex problem-solving, now available on Tencent Yuanbao.

For developers and enterprises, Turbo S is now accessible on Tencent Cloud via API, offering a one-week free trial. The pricing model is highly competitive:

Input cost: ¥0.8 per 1 million tokens

Output cost: ¥2.0 per 1 million tokens

This represents a significant cost reduction compared to previous Hunyuan Turbo models, making advanced AI more accessible for businesses and developers.
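As a rough guide to what these prices mean in practice, the sketch below estimates the bill for a batch of requests. The two prices are the ones quoted above; the workload size and the function name are hypothetical, and the estimate ignores the free trial and any other billing rules Tencent Cloud may apply.

```python
from decimal import Decimal

# Prices quoted in the article, in yuan per 1 million tokens.
INPUT_PRICE_PER_MILLION = Decimal("0.8")
OUTPUT_PRICE_PER_MILLION = Decimal("2.0")
TOKENS_PER_MILLION = Decimal(1_000_000)


def estimate_cost_yuan(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimated charge in yuan for the given token counts."""
    input_cost = Decimal(input_tokens) / TOKENS_PER_MILLION * INPUT_PRICE_PER_MILLION
    output_cost = Decimal(output_tokens) / TOKENS_PER_MILLION * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload: 10,000 requests, each with 1,500 input tokens
# and 500 output tokens.
requests = 10_000
total = estimate_cost_yuan(requests * 1_500, requests * 500)
print(f"Estimated cost: {total:.2f} yuan")  # prints "Estimated cost: 22.00 yuan"
```

`Decimal` is used instead of floats so that small per-token prices add up without rounding drift.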

What This Means for the AI Landscape

The launch of Hunyuan Turbo S signals Tencent’s push to democratize large AI models, making them faster, smarter, and more cost-efficient.

With its innovative hybrid architecture and real-time processing capabilities, Turbo S challenges the status quo of AI-powered applications, setting a new industry benchmark for speed and intelligence.

For global tech enthusiasts and developers, this raises an important question: Could fast-thinking AI models like Turbo S reshape the way we interact with AI-driven tools in business, education, and everyday life?

As AI continues to evolve, Tencent’s latest innovation reaffirms that the next frontier in AI development isn't just about generating better responses—it’s about delivering them in real time.